The OpenShift community produces a lot of interesting tutorials about how to try new solutions and configurations, but unfortunately they are mostly based on a minimal setup such as MiniShift, which is definitely a cool gimmick but hardly resembles a real cluster setup. Often those posts concentrate only on the known good path, describing how something is supposed to function in the best case. They rarely mention how it could be debugged or fixed if it doesn’t work as expected. As all of us know, the more complex a system is, the more can go wrong, and this technology is no exception, especially when run in a real distributed setup. To give you some insight into how such procedures can go wrong, I’d like to share the experience I had when I tried to update my multi-master/multi-node OKD cluster. As an experienced Linux engineer or developer you might think that version updates are nothing special or exciting, but this report may change your mind. I hit many issues, and here is how I dealt with them.

IMPORTANT: This is not a guide on how to upgrade OpenShift. It’s only a field report which is missing a lot of the technical details needed for a successful upgrade. Please always consult the official documentation.

Starting Position

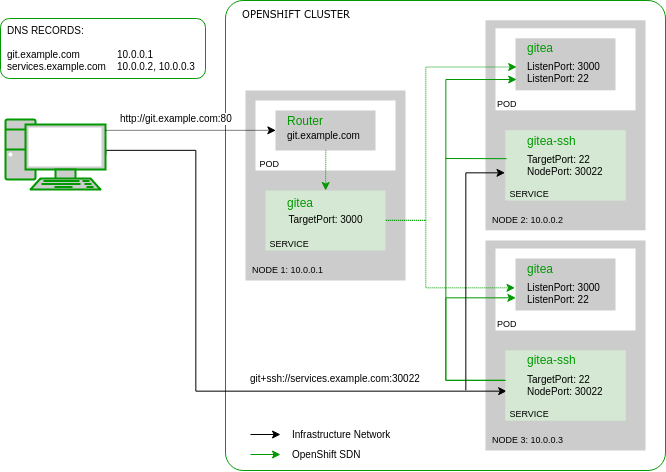

At home I run a small OKD cluster consisting of three masters, each also hosting an etcd member, and four nodes: two infrastructure nodes hosting the routers and registry, and two compute nodes hosting the applications. I feel that’s the minimal setup required to resemble a production-like cluster. To make the setup a bit more interesting, the persistent storage is served by a container-native storage (CNS) configuration where three GlusterFS pods are distributed across the masters. This definitely deviates from how a production cluster should be set up, but as I’m running this locally at home, I don’t have enough resources available for separate storage nodes.

My masters and nodes run on top of CentOS Atomic Host, which I updated to the latest 7.1811 release just a few days ago. As identity provider for OpenShift I’m using a FreeIPA server on a separate CentOS box. Since I installed this cluster with OpenShift Origin 3.9 five months ago, it has been running stably and I have had a lot of fun with it. After the recently published security advisories, the time has come to finally upgrade OpenShift.

What will change?

The first thing you should always do before starting an OpenShift update is to carefully read the release notes. I explicitly point to the Red Hat OpenShift Container Platform (OCP) release notes here, because OKD unfortunately doesn’t polish theirs as nicely. For the initial release of an update they are mostly congruent. Make sure to study them carefully, as they might be the primary source of information once something starts going wrong. For the 3.10 update, one important piece of information is the new handling of the containerized master controller and API services. With that, we have a basic idea about what to expect.

Updating the Ansible Inventory

It would be nice if there was a fool-proof command to run the update, and it seems that OpenShift 4 with its Cluster Version Operator is heading there. But until then we need to carefully study and follow the official OKD 3.10 Upgrade Guide. It’s important to get the documentation for the correct release because the required adjustments to the Ansible inventory differ from release to release. For those who don’t know how OpenShift 3.x release upgrades work: they are done via Ansible playbooks which use the same inventory (the definition of how everything will be configured) as the initial OpenShift cluster installation.

In my inventory file, I first added the node group assignments. For example, the infrastructure nodes are no longer defined via the openshift_node_labels variable, but via a dedicated openshift_node_group_name variable which references a node group definition from the openshift_node_groups configuration. The same change also has to be made for the master and the compute nodes:

- OpenShift 3.9:

      [infra-nodes:vars]
      # Set region to be dedicated for infrastructure pods
      openshift_node_labels={'region': 'infra', 'zone': 'default'}

- OpenShift 3.10:

      [infra-nodes:vars]
      # Set infra node group
      openshift_node_group_name='node-config-infra'

Note that although the openshift_node_labels variable is no longer effective, no labels will be removed during the upgrade. So if you don’t get the label definition right at the beginning you don’t have to worry that after an in-place upgrade some workload is suddenly not scheduled anymore.

I had some custom openshift_node_kubelet_args defined in my OpenShift 3.9 inventory, but this variable is no longer respected either. With 3.10 the correct way to customize the node configuration is to define an edits argument in the corresponding node group definition, which is then applied to a ConfigMap resource by the custom yedit Ansible module. Writing such a definition is already not very intuitive, and it can only be done by re-defining the entire openshift_node_groups variable, which may also break every other node group definition if done wrong. For the moment, I chose to drop my custom node configuration entirely to make the inventory less error prone.

Before running the upgrade playbooks it’s also important that every manual configuration change made in the past (e.g. in the master-config.yaml) is reflected somewhere in the Ansible inventory. Otherwise the change might be lost after the upgrade. In my setup I still had to add the LDAP authenticator to the openshift_master_identity_providers variable because I had added it manually after the initial cluster installation.

The section about Special Considerations When Using Containerized GlusterFS gave me a bit of a bad feeling as my GlusterFS pods are running on the control-plane hosts. But it’s not an easy task to change that now, so I chose to still go on with the upgrade and hope for a work-around in case something should break.

Fixing a failed CNS Brick Process

Once I felt confident that my inventory was in good shape, I started the control-plane upgrade playbook placed at playbooks/byo/openshift-cluster/upgrades/v3_10/upgrade.yml. After only a few minutes it failed for the first time. The error message said that my hosted registry persistent volume was not healthy. That is good news, because the playbook did properly detect my unconventional CNS setup and was even able to check its health. Fortunately, this issue was already familiar to me and it was easily fixed. Here is how you do this:

- Change to the glusterfs project (or any custom project where you are running the GlusterFS pods) as a project or cluster administrator and query the names of the GlusterFS pods:

      $ oc project glusterfs
      Now using project "glusterfs" on server "https://openshift.example.com:8443".
      $ oc get pods -n glusterfs -o wide
      NAME                      READY  STATUS   RESTARTS  AGE  IP           NODE
      glusterfs-storage-dz8qj   1/1    Running  3         2d   10.0.0.10    master01.example.com
      glusterfs-storage-jncsl   1/1    Running  3         1d   10.0.0.11    master02.example.com
      glusterfs-storage-r24wg   1/1    Running  2         11h  10.0.0.12    master03.example.com
      heketi-storage-1-w8c42    1/1    Running  1         6d   10.129.0.21  node02.example.com

- Connect to one of the GlusterFS pods and list the volume status. E.g.:

      $ oc rsh glusterfs-storage-dz8qj
      sh-4.2# gluster volume status
      Status of volume: glusterfs-registry-volume
      Gluster process                             TCP Port  RDMA Port  Online  Pid
      ------------------------------------------------------------------------------
      Brick 10.0.0.11:/var/lib/heketi/mounts/vg_61
      bc5a26248cc6ea9fb7ffaae4edbe93/brick_128dfe
      b5436dad25702e689d3d6f4b8a/brick            49152     0          Y       204
      Brick 10.0.0.12:/var/lib/heketi/mounts/vg_5e
      cf67ed85f71cf28090d7db1acc6433/brick_d36469
      4c7034276c22e264eb2576413b/brick            49152     0          N
      Brick 10.0.0.10:/var/lib/heketi/mounts/vg_49
      e81b0f4c91942c7657e9b7ffff7834/brick_8717e0
      992390a7c04431890ba56b7656/brick            49152     0          Y       224
      Self-heal Daemon on localhost               N/A       N/A        Y       215
      Self-heal Daemon on 10.0.0.11               N/A       N/A        Y       163
      Self-heal Daemon on 10.0.0.12               N/A       N/A        Y       172
      Task Status of Volume glusterfs-registry-volume
      ------------------------------------------------------------------------------
      There are no active volume tasks
      [...]

  According to my experience it can happen that sometimes a brick displays an N in the Online column, which means that the corresponding brick process wasn’t started successfully. If multiple bricks of the same volume are down, your entire volume is down and must be properly recovered. In such a case don’t continue with the steps below!

- Via the IP address of the brick, you can figure out which host is affected and then you can simply delete the corresponding pod:

      $ oc delete pod glusterfs-storage-r24wg

  The pod will be automatically restarted and the brick processes should come up this time.
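The lookup from a brick IP to the pod that has to be deleted can be scripted. The following is only a minimal sketch: the function name pod_for_brick_ip is made up for illustration, and it assumes the column order of the oc get pods -o wide listing shown above (IP in the sixth column).

```shell
#!/bin/sh
# pod_for_brick_ip IP
# Reads `oc get pods -o wide` output on stdin and prints the name of the
# pod whose IP column equals the given address.
# Assumption: columns are NAME READY STATUS RESTARTS AGE IP NODE, as in
# the listing above; other client versions may print additional columns.
pod_for_brick_ip() {
    awk -v ip="$1" 'NR > 1 && $6 == ip { print $1 }'
}
```

Fed with the listing above, oc get pods -n glusterfs -o wide | pod_for_brick_ip 10.0.0.12 prints glusterfs-storage-r24wg, which is the pod deleted in the last step. Only use it when a single brick of a volume is offline.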

Fixing the Hosted Registry Storage Definition

The second run of the control-plane playbook eventually attested that all GlusterFS volumes are healthy but again it failed only two tasks later with a rather cryptic error message, something like:

    TASK [openshift_storage_glusterfs : Check for GlusterFS cluster health] ********
    task path: /usr/share/ansible/openshift-ansible/roles/openshift_storage_glusterfs/tasks/cluster_health.yml:4
    Using module file /usr/share/ansible/openshift-ansible/roles/lib_utils/library/glusterfs_check_containerized.py
    FAILED - RETRYING: Check for GlusterFS cluster health (120 retries left).Result was: {
        "attempts": 1,
        "changed": false,
        "invocation": {
            "module_args": {
                "cluster_name": "registry",
                "exclude_node": "master01.example.com",
                "oc_bin": "/usr/local/bin/oc",
                "oc_conf": "/etc/origin/master/admin.kubeconfig",
                "oc_namespace": "default"
            }

Didn’t it just say that all the GlusterFS volumes are healthy? What the heck is "cluster_name": "registry" and what is it doing with GlusterFS in the ‘default’ namespace anyway?

I found the solution after digging deep into the openshift_storage_glusterfs Ansible role and reading the CNS installation instructions again and again. I had become a victim of my “simplified” CNS setup. The reference installation is meant to have two separate CNS GlusterFS clusters: one exclusively for the hosted registry volume (indicated by the [glusterfs_registry] Ansible host group) and a second cluster for any other persistent volumes (indicated by the [glusterfs] Ansible host group). As mentioned before, I’m limited in available hosts, so I added the master hosts to both host groups and set the glusterfs_devices variable to the same device when installing the CNS. With OpenShift 3.9 that was all that was needed to create the hosted registry volume in the “regular” CNS cluster. However, the 3.10 playbook expects the registry volume to be in a different project with a different name. Fortunately, all that was needed to fix this were some additional inventory variables in the [OSEv3:vars] section:

    # Adjust variables for registry storage to match default converged glusterfs storage setup
    openshift_storage_glusterfs_registry_name=storage
    openshift_storage_glusterfs_registry_namespace=glusterfs

With the updated inventory I started the control-plane upgrade playbook once more. This time it ran for quite a while and even started to do some real stuff. It replaced the docker run command in the ‘origin-node’ systemd service with a runc command using the 3.10 image. Finally some progress. But eventually another error aborted the playbook and again it was a totally unexpected one.

etcd Backup Failure

Before updating the etcd cluster, there is a task which backs up the etcd database, and this failed miserably. It couldn’t run docker exec etcd_container etcdctl backup [...]. When executing the command manually on a master host, I received the same error message:

rpc error: code = 2 desc = oci runtime error: exec failed: container_linux.go:247: starting container process caused "process_linux.go:110: decoding init error from pipe caused \"read parent: connection reset by peer\""

My first suspicion was the Ansible role. Maybe the backup command is wrong? But I couldn’t find any radical changes in the commit history regarding the etcd backup, and a blocking issue like this is unlikely to go unnoticed for such a long time. Maybe something is wrong with the image? The OpenShift Origin 3.9 setup was using a rather atypical image at that time, the only one from the Fedora image registry (registry.fedoraproject.org/latest/etcd:latest). When I see the ‘latest’ tag being used with containers, I’m instantly suspicious that bugs might sneak in unnoticed, as different users may get different images depending on when they pull them. Maybe they mistakenly pushed an image without a shell or without the etcdctl binary? So I asked in the #openshift IRC channel on Freenode whether someone had experienced the same issue before, but didn’t get any reply. Suddenly I had an idea: only a few hours earlier I had used the etcdctl tool from the Atomic host to do my own etcd backup. I just needed to find a way to make Ansible use the etcdctl from the host and everything would be fine. So I dug a bit into the Ansible etcd role, and a few minutes later I set r_etcd_common_etcdctl_command to "etcdctl" in my inventory, confident that this would fix my issue. It didn’t, but I wouldn’t find that out anytime soon…

The Master API cannot find the LDAP CA Certificate

In the next attempt, the playbook happily ran the etcd backup, upgraded the etcd images, converted them to static pods on all masters and did the same for the other two control plane services, the API service and the controller service, starting on the first master. Eventually the ‘origin-master-api’ and ‘origin-master-controller’ services were shut down and the corresponding pods should have been started, so the playbook was waiting for the API service to come up and waited and waited… The pod didn’t come up. Hmpf. The time had already come to use the new debugging command I had read about in the release notes to see what was going on:

# /usr/local/bin/master-logs api api

That is an alternative for the corresponding oc command that I’m also able to run from my client machine:

$ oc logs master-api-master01.example.com -n kube-system

But the latter was behaving weirdly. Sometimes it hung although the API services of the two other masters were still up. There was definitely something wrong.

When checking the logs locally, I saw an error saying that my FreeIPA CA certificate, which should be used to validate the LDAPS connections, could not be found. That’s strange. I explicitly configured the ca key in the openshift_master_identity_providers variable, pointing it to the correct CA certificate. I did this in other OpenShift cluster inventories before and there it was working… But those were not running OpenShift 3.10 or later. With 3.10 the playbook developers removed the possibility to custom-name the CA certificate, so the ca key from the inventory was silently ignored. Only after checking the installation instructions regarding Configuring identity providers with Ansible did I find an inconspicuous comment that the CA certificate destination path now follows a given naming convention. When I was adding the identity provider configuration to the inventory before the update, I didn’t specify the openshift_master_ldap_ca or openshift_master_ldap_ca_file variables, which ensure that the CA certificate is copied to the correct place. After all, the certificates were already on the master hosts and the identity provider was working, so I didn’t want the upgrade playbook to touch the certificate. Now that’s the result: my mistake. Still, I like issues that are clear and can be fixed so easily. A quick rename of the certificate on the master hosts made the API service start successfully again.

How a Docker Bug broke the etcd Cluster

All API servers are running again, although only the first one in the final configuration, but the oc command invocations still feel sluggish. Sometimes they even hang completely. When checking the process list, I noticed that the etcd processes were only a few minutes old and sometimes not running at all. So I checked the etcd cluster health and there it was: two cluster members were down and one was in the state unhealthy. That is bad… Immediately, I started manually triggering etcd restarts. But only a few minutes later they shut down again. I checked the log files and there were errors, but I couldn’t pin down a single cause for this mess. Then I found that /etc/etcd/etcd.conf had been updated during the playbook run, so I restored the backup, but that didn’t fix the issue either. Eventually I started to accept the thought that I might need to completely restore the etcd database from a backup, because in the meantime the database might have become so corrupt that it could no longer reach a stable state.
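Why two lost members out of three are fatal: etcd only accepts writes while a majority of the configured members is available. A minimal sketch of that arithmetic, with function names made up for illustration:

```shell
#!/bin/sh
# etcd_quorum MEMBERS
# Print the smallest number of members that still forms a majority.
etcd_quorum() {
    echo $(( $1 / 2 + 1 ))
}

# has_quorum MEMBERS HEALTHY
# Succeed (exit status 0) if HEALTHY members are enough for a majority.
has_quorum() {
    [ "$2" -ge "$(etcd_quorum "$1")" ]
}

# Example: three members, only one of them still answering.
if has_quorum 3 1; then
    echo "cluster can still accept writes"
else
    echo "quorum lost: $(etcd_quorum 3) of 3 members are required"
fi
```

With three members the cluster tolerates the loss of exactly one. Once two are down, restarting the survivor alone cannot bring the cluster back.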

The OKD documentation for Restoring etcd quorum would have been the correct guide to follow in this situation, but for some reason I landed at Restoring etcd. That confronted me with yet another issue: this guide was not yet properly updated for OpenShift 3.10. Some parts of the documentation still reference etcd as a systemd service. But in my setup it’s a pod. Trying to pass the --force-new-cluster parameter to the etcd process via a systemd override obviously doesn’t have any effect. Eventually I found out about the /etc/origin/node/pods/etcd.yaml file, which contains the pod definition. There we are able to pass the parameter correctly so that it is picked up by the pod startup command. But again, even with an empty database, my pod would die a few minutes later. Something is badly broken here. In the YAML definition I also found the liveness probe. So once the pod was started once more, I tried to execute the liveness probe to see what it returns, and the result looked familiar, in a bad way:

rpc error: code = 2 desc = oci runtime error: exec failed: container_linux.go:247: starting container process caused "process_linux.go:110: decoding init error from pipe caused \"read parent: connection reset by peer\""

Ouch! Now I understand why the pods keep restarting. It’s again the same error that also caused the etcd backup failure before. But now I’m using the new quay.io/coreos/etcd:v3.2.22 image. This disproved my theory that a buggy image might be the reason. For the moment, I ran out of ideas… Until I remembered that I recently read a post about a docker bug (#1655214) that affected CentOS 7. Thanks for that! After checking the docker version on Atomic host 7.1811 (docker-1.13.1-84.git07f3374.el7.centos) it’s confirmed. That’s the root cause for so much trouble so far.
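Checking several hosts for the affected docker build can be scripted. This is a rough sketch only: the function names are made up, and the threshold rests on an assumption taken from this report, namely that release 84 of docker 1.13.1 is broken and release 88 is fixed. Nothing is known here about the releases in between or before.

```shell
#!/bin/sh
# docker_release NVR
# Print the numeric release of a docker package string such as
# "docker-1.13.1-84.git07f3374.el7.centos".
docker_release() {
    rel=${1##*-}          # drop everything up to the last "-"
    echo "${rel%%.*}"     # drop everything from the first "."
}

# needs_docker_fix NVR
# Succeed if the release is older than 88 (assumed threshold, see above).
needs_docker_fix() {
    [ "$(docker_release "$1")" -lt 88 ]
}
```

On a host this could be used as needs_docker_fix "$(rpm -q docker)" && echo "docker update required".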

Updating Docker on Atomic Host

I hadn’t needed to dig too much into Atomic Host so far, as most of the stuff was simply working or was easily fixed with an update in the past. But this time it didn’t look like an update was imminent. Release 7.1811 was only a few days old. I could roll back, but the previous version is 7.1808. That’s three months back and somehow defeats the purpose of my update, which was to get the latest security fixes. Fortunately CentOS had already released new docker packages where this bug is fixed. Now I just needed to find a way to update the docker packages independently from the ostree image. This time the documentation gods were on my side. I quickly found Dusty Mabe’s Atomic Host 101 Lab Part 4: Package Layering, Experimental Features.

Here is my guide for quickly working around Bug #1655214 by updating the docker packages to release 1.13.1-88.git07f3374.el7.centos on CentOS Atomic Host:

- Create a temporary directory and download the corresponding RPM packages from a mirror of your choice:

      # mkdir /tmp/docker-1.13.1-88
      # cd /tmp/docker-1.13.1-88
      # for pkg in docker docker-client docker-common docker-lvm-plugin docker-novolume-plugin ; do \
          curl -O https://mirror.init7.net/centos/7/extras/x86_64/Packages/$pkg-1.13.1-88.git07f3374.el7.centos.x86_64.rpm ; \
        done

- From within the directory run rpm-ostree override replace to replace the docker packages from the ostree layer with the new RPMs:

      # rpm-ostree override replace docker*
      Checking out tree ee5a6f2... done
      Inactive requests:
        docker (already provided by docker-2:1.13.1-84.git07f3374.el7.centos.x86_64)
      Enabled rpm-md repositories: base updates extras
      Updating metadata for 'base': [=============] 100%
      rpm-md repo 'base'; generated: 2018-11-25 16:00:34
      Updating metadata for 'updates': [=============] 100%
      rpm-md repo 'updates'; generated: 2018-12-10 15:34:27
      Updating metadata for 'extras': [=============] 100%
      rpm-md repo 'extras'; generated: 2018-12-10 16:00:03
      Importing metadata [=============] 100%
      Resolving dependencies... done
      Relabeling (5/5) [=============] 100%
      Applying 5 overrides
      Processing packages (10/10) [=============] 100%
      Running pre scripts... 1 done
      Running post scripts... 5 done
      Writing rpmdb... done
      Writing OSTree commit... done
      Copying /etc changes: 42 modified, 5 removed, 613 added
      Transaction complete; bootconfig swap: no; deployment count change: 0
      Freed: 580.5 kB (pkgcache branches: 0)
      Upgraded:
        docker 2:1.13.1-84.git07f3374.el7.centos -> 2:1.13.1-88.git07f3374.el7.centos
        docker-client 2:1.13.1-84.git07f3374.el7.centos -> 2:1.13.1-88.git07f3374.el7.centos
        docker-common 2:1.13.1-84.git07f3374.el7.centos -> 2:1.13.1-88.git07f3374.el7.centos
        docker-lvm-plugin 2:1.13.1-84.git07f3374.el7.centos -> 2:1.13.1-88.git07f3374.el7.centos
        docker-novolume-plugin 2:1.13.1-84.git07f3374.el7.centos -> 2:1.13.1-88.git07f3374.el7.centos
      Run "systemctl reboot" to start a reboot

- Reboot the host.

I carefully did this on one master server after the other and, surprisingly, all the services (except etcd) started normally. Even my GlusterFS pods came up again as if nothing had happened. But still, the etcd cluster was offline and with it the Master API was inaccessible. No oc commands were possible.

Fixing the etcd Cluster

With the docker issue fixed, I now had to bring up the etcd cluster again. The database was likely in a confused state because of all the failed attempts before, so I decided to restore a known good state. As briefly mentioned before, to do so you actually create a new cluster with the database from a backup. Because the OpenShift documentation on how to do this cannot be followed easily, I list below the exact steps by which I managed to do it:

- Make sure that all the etcd processes are down and not coming up again automatically. On an OpenShift 3.10 cluster, you prevent automatic startup by moving the /etc/origin/node/pods/etcd.yaml definition to a backup location, e.g. /etc/origin/node/pods/disabled/, on every etcd host.

- First create a new one-node etcd cluster on the first etcd host. To do so, we need some preparation:

  - The /etc/etcd/etcd.conf configuration must not contain any previous configurations regarding the INITIAL_CLUSTER or INITIAL_CLUSTER_STATE. I was able to simply use the etcd.conf generated by the upgrade playbook, which already set those two variables to the correct value:

        ETCD_INITIAL_CLUSTER=
        ETCD_INITIAL_CLUSTER_STATE=new

    Also make sure the ETCD_INITIAL_ADVERTISE_PEER_URLS only contains the URL of the first host itself and no other peers:

        ETCD_INITIAL_ADVERTISE_PEER_URLS=https://10.0.0.10:2380

  - Restore the etcd database from a backup. Fortunately the upgrade playbook automatically created a backup after the etcd upgrade, so I’m going to restore to that state:

        # mv /var/lib/etcd/member /var/lib/etcd/member.orig
        # cp -rP /var/lib/etcd/openshift-backup-post-3.0-20181214022846/member /var/lib/etcd/

  - When starting the first etcd member for the first time, we need to pass the --force-new-cluster argument to the process. This will override the cluster definition from the database files. To do so, the etcd.yaml file has to be adjusted. Here is the important snippet (everything else should be kept as it is):

        spec:
          containers:
          - args:
            - '#!/bin/sh

              set -o allexport

              source /etc/etcd/etcd.conf

              exec etcd --force-new-cluster

              '

  - If everything is ready to start the etcd process, move the altered etcd.yaml file back to the /etc/origin/node/pods directory. Within a few moments, the pod should start up and create a new cluster.

- Check the initial cluster state via:

      # etcdctl2 cluster-health
      member 67aa8b8cc701 is healthy: got healthy result from https://10.0.0.10:2379

  If something went wrong, you might want to check the logs via:

      # /usr/local/bin/master-logs etcd etcd

- Initially the first member still advertises a PeerURL pointing to ‘localhost’:

      # etcdctl2 member list
      67aa8b8cc701: name=master01.example.com peerURLs=http://localhost:2380 clientURLs=https://10.0.0.10:2379 isLeader=true

  This must be replaced by the correct host URL pointing to itself:

      # etcdctl2 member update 67aa8b8cc701 https://10.0.0.10:2380
      Updated member with ID 67aa8b8cc701 in cluster

  Then it correctly shows:

      # etcdctl2 member list
      67aa8b8cc701: name=master01.example.com peerURLs=https://10.0.0.10:2380 clientURLs=https://10.0.0.10:2379 isLeader=true

- This configuration was automatically saved in the database. So the --force-new-cluster argument can be removed again. Edit the etcd.yaml in-place to restore the original configuration. After doing so, restart the etcd process with:

      # /usr/local/bin/master-restart etcd

  If it comes up again and shows healthy, we can continue to add the other two cluster members.

- The following steps to add another cluster member obviously have to be done for both other etcd hosts:

  - Add the new host to the cluster by executing the following command on the first etcd host:

        # etcdctl2 member add master02.example.com https://10.0.0.11:2380
        Added member name master02.example.com with ID a6b2e8d0d392083b to cluster
        ETCD_NAME="master02.example.com"
        ETCD_INITIAL_CLUSTER="master01.example.com=https://10.0.0.10:2380,master02.example.com=https://10.0.0.11:2380"
        ETCD_INITIAL_CLUSTER_STATE="existing"

    The new member will then be displayed as ‘unstarted’ in the member list.

  - Prepare the /etc/etcd/etcd.conf file on the new etcd host by defining the variables as shown in the output of the etcdctl2 member add command above. The ETCD_INITIAL_CLUSTER value will automatically be extended with each new member added to the cluster.

  - Delete the old database on the new etcd host. It will automatically be synced from the other cluster members once the new node has joined:

        # mv /var/lib/etcd/member /var/lib/etcd/member.orig

  - Enable the etcd pod by moving the etcd.yaml back to /etc/origin/node/pods. Within a few minutes the etcd process should be started and eventually join the etcd cluster.
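The check for a peer URL that still points to localhost, as described in the steps above, can be automated before the other members are added. A minimal sketch, assuming the etcdctl2 member list output format shown above; the function name is made up for illustration:

```shell
#!/bin/sh
# members_with_localhost_peer
# Reads `etcdctl2 member list` output on stdin and prints the ID of every
# member whose peerURLs entry still points to localhost.
members_with_localhost_peer() {
    awk '/peerURLs=[^ ]*localhost/ { sub(/:$/, "", $1); print $1 }'
}
```

A possible use is etcdctl2 member list | members_with_localhost_peer, followed by an etcdctl2 member update for each ID that is printed. The URL passed to that update has to be filled in by hand, as the sketch does not know the correct address of the host.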

Once the etcd cluster was restored, the oc command was finally working again and I could check the state of the etcd pods also via OpenShift client:

    $ oc get pods -n kube-system | grep etcd
    master-etcd-master01.example.com   1/1   Running   5   1h
    master-etcd-master02.example.com   1/1   Running   0   47m
    master-etcd-master03.example.com   1/1   Running   0   2m

During the entire time the etcd cluster was down the OpenShift cluster continued running. The registry, routers and applications such as my Gitea setup were online all the time and even the CNS cluster running on the master hosts handled the debugging and restart session with bravery. Fortunately I had a super static setup during that time and so no deployments or replica count enforcement needed to be executed which would have been impossible anyway. Still I feel it’s a positive fact that shows the resiliency the platform has gained over time.

Finishing the Control Plane Upgrade

After a longer detour, I was finally back at the point where I could start another run of the control plane upgrade playbook. Remember: when the playbook aborted before, it did so after upgrading the control plane services on the first master node, so there were still two to go. So I started the playbook once again.

By now I have a really good feeling about the state of the playbook in this release. As you can see above, it failed on me many times in all different stages of the update, but it always had a good reason and it was always able to pick up where it left off. My experience with initial upgrade attempts of earlier OpenShift releases was unfortunately not always that good. For example, I once had to restore a master host from a snapshot because the playbook failed to correctly detect the upgrade state in the second run, after it had aborted the first run due to a syntax error in a post-upgrade task.

This time the playbook finished successfully and my control plane was finally at release 3.10:

    # /usr/local/bin/oc version
    oc v3.10.0+c99b16a-90
    kubernetes v1.10.0+b81c8f8
    features: Basic-Auth GSSAPI Kerberos SPNEGO
    Server https://openshift.example.com:8443
    openshift v3.10.0+c99b16a-90
    kubernetes v1.10.0+b81c8f8

Running the Node Upgrade Playbook

After the control plane was done, I had to upgrade the infrastructure and compute nodes. A separate playbook placed at playbooks/byo/openshift-cluster/upgrades/v3_10/upgrade_nodes.yml is available for this. Initially I only wanted to run it on a single node to make sure everything works as expected. This can be done by passing the -e openshift_upgrade_nodes_label=kubernetes.io/hostname=node03.example.com argument to the playbook execution command, where the given host name is the node that should be upgraded. The playbook completed without error on the very first attempt, so I continued with the other nodes.

One fact is super important when upgrading the nodes to OpenShift 3.10. The /etc/origin/node/node-config.yaml is completely regenerated based on the settings in the corresponding node group (and/or the defaults) and so any prior adjustment not reflected in the inventory is lost. Therefore make sure that you perfectly understand the Node Group concept and how it affects your node layout and configuration.

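As an example of how to customize the upgrade behavior, I added the following arguments to the playbook execution for the infrastructure nodes: -e openshift_upgrade_nodes_label=region=infra -e openshift_upgrade_nodes_serial=50%. The first one restricts the run to the nodes carrying the region=infra label, the second one upgrades only half of the matching nodes at a time.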
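To make the two variants above easier to compare, here is a small sketch that only prints the resulting command line instead of running it. The function name and the inventory path /etc/ansible/hosts are assumptions for illustration; the playbook path and the two variables are the ones mentioned above.

```shell
#!/bin/sh
# Assumed inventory location, override it via the INVENTORY variable.
INVENTORY=${INVENTORY:-/etc/ansible/hosts}
PLAYBOOK=playbooks/byo/openshift-cluster/upgrades/v3_10/upgrade_nodes.yml

# node_upgrade_cmd LABEL [SERIAL]
# Print the ansible-playbook call that upgrades only the nodes matching
# LABEL and, if SERIAL is given, only SERIAL nodes at a time.
node_upgrade_cmd() {
    cmd="ansible-playbook -i $INVENTORY $PLAYBOOK -e openshift_upgrade_nodes_label=$1"
    if [ -n "${2:-}" ]; then
        cmd="$cmd -e openshift_upgrade_nodes_serial=$2"
    fi
    printf '%s\n' "$cmd"
}

# Single node first, then the infrastructure nodes, half of them at a time.
node_upgrade_cmd kubernetes.io/hostname=node03.example.com
node_upgrade_cmd region=infra 50%
```

Printing rather than executing leaves room to review the command before anything touches the cluster.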

Fixing the Infrastructure Node Selector

It confused me that the NodeSelector of the infrastructure components such as the registry and routers was not updated to the new defaults. In the inventory I explicitly defined the new node selector:

openshift_hosted_registry_selector='node-role.kubernetes.io/infra=true'

But when checking the DeploymentConfig of the registry, I can still find the old NodeSelector:

    $ oc get dc docker-registry -n default -o json | jq .spec.template.spec.nodeSelector
    {
      "region": "infra"
    }

So I manually triggered an update of the NodeSelector property in all the DeploymentConfigs using it. E.g.:

    $ oc patch dc docker-registry -n default --type json --patch '[{"op":"replace","path":"/spec/template/spec/nodeSelector","value":{"node-role.kubernetes.io/infra":"true"}}]'
    deploymentconfig "docker-registry" patched

NodeSelectors can also be set in DaemonSets, as annotations in projects or even globally via master-config.yaml. Therefore make sure to update them all, when required, before removing any labels from the nodes.

After checking that all the pods are up and running again, I was finally able to remove the old infrastructure labels from the nodes:

$ oc label node node01.example.com region- zone-
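The patch from this section has to be repeated for every DeploymentConfig that still carries the old selector, so it may help to generate the JSON once. A minimal sketch: the function name is made up, and the DeploymentConfig names in the commented loop are assumptions that have to be adapted to the cluster.

```shell
#!/bin/sh
# nodeselector_patch KEY VALUE
# Print a JSON patch that replaces the pod template's nodeSelector with
# the single label KEY=VALUE. KEY and VALUE are inserted without JSON
# escaping, so this is only meant for plain label strings.
nodeselector_patch() {
    printf '[{"op":"replace","path":"/spec/template/spec/nodeSelector","value":{"%s":"%s"}}]\n' "$1" "$2"
}

# Possible use (DeploymentConfig names are assumptions):
#   for dc in docker-registry router; do
#       oc patch dc "$dc" -n default --type json \
#           --patch "$(nodeselector_patch node-role.kubernetes.io/infra true)"
#   done
```

Called with node-role.kubernetes.io/infra and true, the function prints exactly the patch used for the docker-registry above.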

Summarizing

This was not my first OpenShift update ever, but my first update from 3.9 to 3.10. This obviously means that I made some mistakes and had wrong assumptions, from which I learned a lot. I hope I could share some insights and useful hints for those of you who haven’t done this before. If not, it will at least help me in the future to run this update on another cluster much more smoothly.

Finally, some advice for those of you who also need to do such an upgrade:

- You need to have a test cluster where you can practice such updates. It doesn’t need to be big, but the Ansible inventory variables should be structurally as similar as possible to those of the production cluster. As you saw above, a lot of errors happened simply because of wrong inventory variables. Ideally the test cluster should have some workload so that you experience how the applications behave during the update and so that you can test if everything still works after an upgrade.

- Emphasize your Ansible inventory. Every part of your configuration that can fit into the Ansible inventory must be defined there and must be maintained there. It can cost you a lot of time debugging or even result in application downtime during an upgrade if you manually updated the cluster configuration without adjusting the configuration in the inventory. Even when it sometimes feels like more work than benefit, it’s always worth it.

- Preparation is key. Carefully read through the upstream documentation available. Most likely you also have some internal documentation where your infrastructure specifics are written down. Run the upgrade on a test cluster before you do it in production. If it doesn’t work on the first attempt, update your notes and try it again. Try to gain as much experience as possible on the test infrastructure so that you already know what to do if something goes wrong in production.

- Plan a lot of time. Doing such an upgrade is a lot of work! Give yourself enough time to do a proper preparation and also the actual upgrade window itself should give you enough time to fix issues when they arise. Plan in the scale of hours or better days. Ansible is slow. If you have to restart the playbook because of an error after 15 minutes this will eat up your time fast.

Thanks for reading. As always I’d welcome some feedback or criticism in the comments.